Color theory readings

What resources can be found online and in libraries to help understand some color theory?

This page addresses most of the mistakes and misconceptions about Ansel that can be found online.

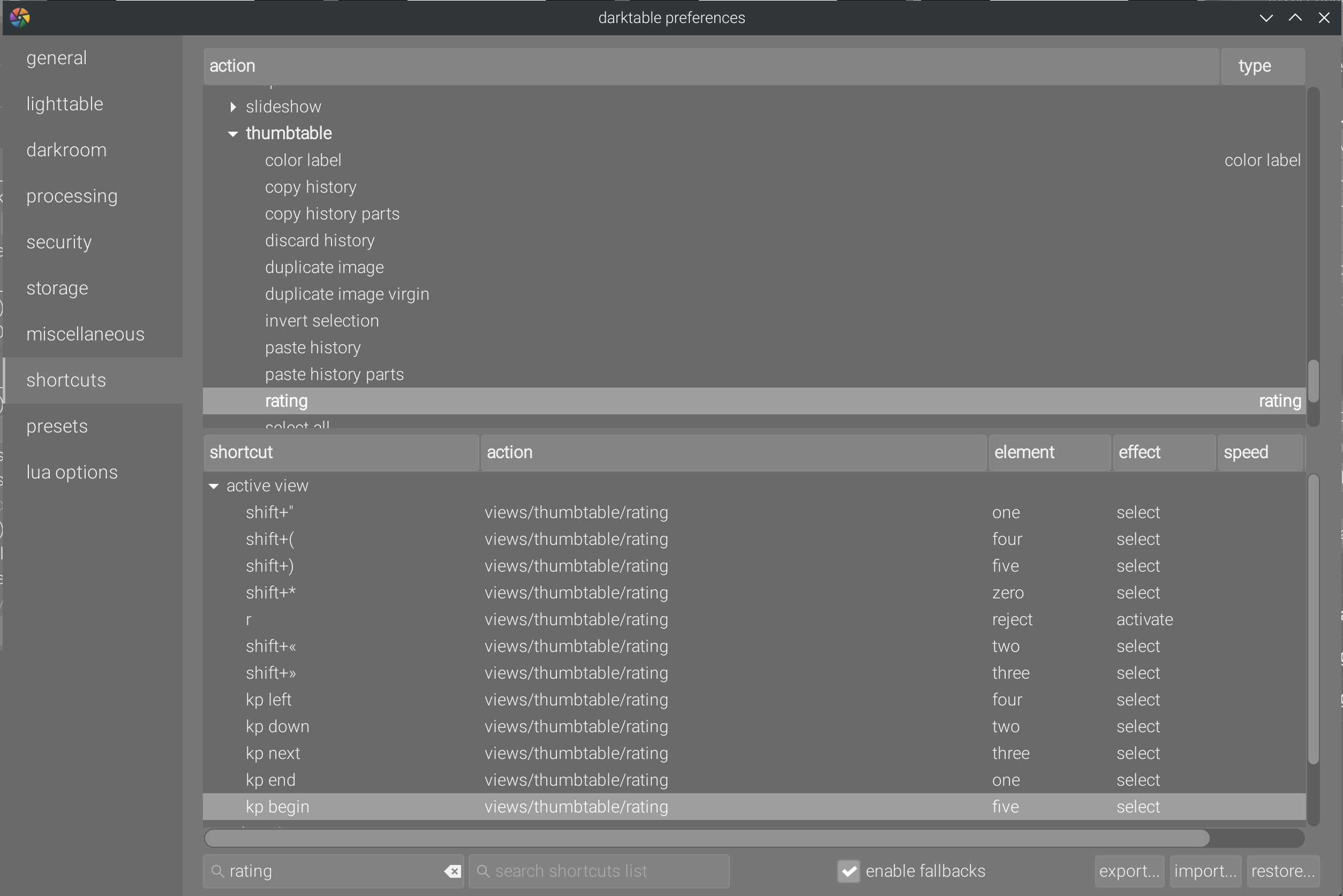

In my defining post, Darktable: crashing into the wall in slow-motion, I presented the trainwreck that the new “Great MIDI turducken” was. The purpose of this turducken was to rewrite the keyboard shortcuts system to extend it to MIDI devices.

To this day, I’m still mad about this enterprise of mass-destruction. Here is a recap of the reasons:

if/switch-case statements nested on 4 levels, in the middle of 1000-line functions (I posted example snippets in my article),

… Keypad End, Shift+&, or Shift+" on BÉPO,

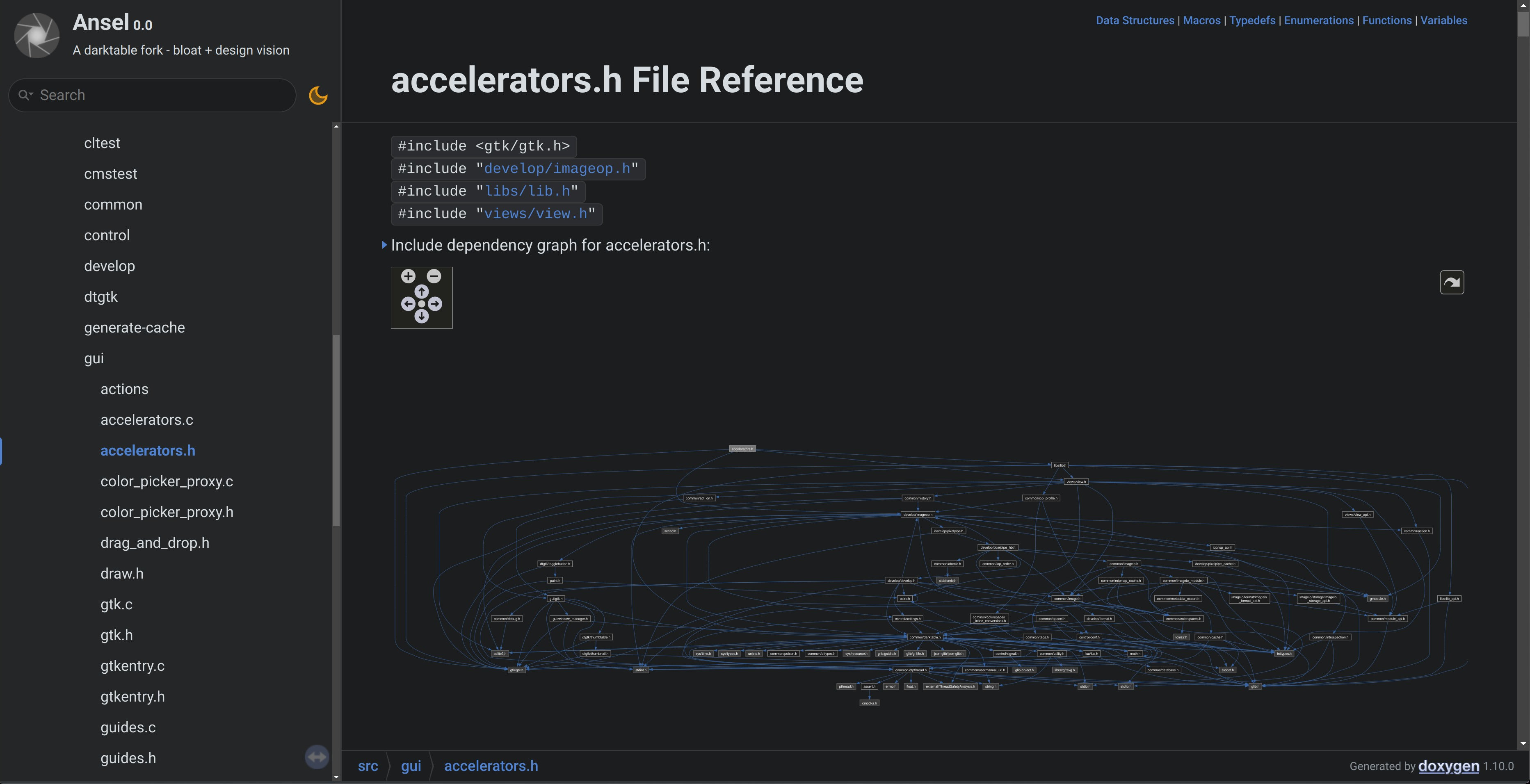

The dependency graph of src/gui/accelerators.c (Great MIDI turducken) before the rewrite. Guess why we call it “spaghetti code”… This makes it clear that there is a two-way dependency between the accels code and the rest of the GUI code. This is a nightmare to maintain.

After I finally wired the whole website and docs to a water-tight translation workflow (using po4a on top of Hugo), which happens to use the exact same toolset and logic as the Ansel application, I got the idea of automating empty translations, first from the software translation files, then through the ChatGPT API, which does a very fair job at translating Markdown syntax.

Working alone, you can’t rely on social loafing, so you have to be clever. You can see the list of things I have already automated in the background for Ansel.

Alain Oguse learned photographic printing with Claudine and Jean-Pierre Sudre in the late 1960s, and spent his early career in commercial photography. After retiring, he started to investigate how to bring back the photographic (silver halide) grain in digital scans of film negatives, finding the same sharpness and quality he had with near-point light enlargers in the 1970s.

The point light printing technique uses a very tiny source of light that gives a very precise and detailed reproduction of B&W film negatives, as opposed to diffuse lighting. It is very demanding, as its unforgiving sharpness and contrast do not hide scratches and dust on the film surface. Prints done this way would often need manual (painted) corrections on paper, inducing more work and more costs. By the end of the 1970s, it was usually replaced by diffuse light… better at hiding manipulation mistakes and at maximizing print labs’ profits.

Back in December 2019, I asked that someone take care of providing AppImage packages for Darktable. The obvious benefit would have been enabling early testing, prior to release, by people who can’t build the source code themselves, so as to hopefully provide early feedback and help with debugging before releasing. This has never been a priority, which means that it was ok to have a pre-release and a post-release rush to fix bugs.

We all know what “white” is. We can picture a white sheet of paper. We know it is white because we learned it. But if you put your paper sheet under a summer sun, or a cloudy sky, or at home with those warm living-room bulbs, that white will change color. It might disturb you for the first few seconds, then you will just forget about it: your brain will adapt. But adapt (to) what?

This applies to upstream Darktable as well as to Ansel, since they share most of their color pipeline. The following procedure will help you troubleshoot your color issues, whether it is inconsistent appearance between export vs. preview, or between screen vs. print, or between 2 apps.

The inherent problem of color is that it exists only as a perception, and that perception is highly contextual and fluid. If I take any color patch and display it over a white, middle-grey or black background, it will not appear the same even though a colorimeter would confirm it’s the exact same color. I have built a little web animation to showcase this effect, displaying sRGB gamut slices at constant hue, over user-defined background lightness: the sRGB book of color.

…

Ansel inherits from Darktable its database backbone: the non-destructive editing histories are saved per-picture into an SQLite database, along with metadata and other user-defined data. Making the database aware of new pictures is done through “importing” pictures from a disk or a memory card. That’s where the import tool comes in.

Unfortunately, the Darktable importer is another thing that was butchered circa 2020 and turned into something deeply disconcerting, as it is a file browser that resembles no previously-known file browser, and manages to lack basic features (like Ctrl+F or EXIF preview) while still being bloated with useless ones (see below). This is where we lose many a future user, and it is only step 0 of the workflow. What a great showcase of what a “workflow app” can do!

mindmap

root((COLOR))

color appearance model

uniform color space

chromaticity

U, V

a, b

lightness

L

delta E

chromatic adaptation transform

illuminant

color reproduction index

color temperature

surround lighting

background lightness

dimensions

Munsell

hue

chroma

value

natural color system

blackness

saturation

hue

CIE

lightness

brightness

saturation

chroma

colorfulness

hue

measure

colorimetry

tristimulus

sensor

Luther-Ives criterion

metamerism

dynamic range

noise

mosaicing

Bayer

XTrans

zipper artifacts

spaces

rgb(RGB)

HSV

HSL

LMS

Yrg

XYZ

Yxy

Yuv

Ych

CMY

CMYK

primaries

cone cells

LED

ITU BT.Rec 709

ITU BT.Rec 2020

DCI P3

inks

spectrometry

light spectrum

wavelengths

energy

photometry

luminance

correction

profile

matrix

lookup table

transfer function

color grading

ASC CDL

channel mixer

curves

white balance

I have thought, for a very long time, that there was some kill-switch mechanism on the pixel pipeline. The use case is the following:

In that case, you want to kill all active pipelines because their output will not be used, and start recomputing everything immediately with new parameters. Except Darktable doesn’t do that, it lets the pipeline finish before restarting it, and looking at the comments in the source code, it seems to be a fairly recent regression and not the originally intended behaviour.
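To make the idea concrete, here is a minimal Python sketch of what a kill switch on a pipeline could look like. It is not Darktable’s or Ansel’s code (those are written in C); the names `run_pipeline`, `make_module` and `user_changes_parameter` are hypothetical and only serve the illustration. The pipeline checks a shared flag before each module and gives up as soon as the flag is raised, instead of finishing a computation whose output will be thrown away.

```python
import threading


def run_pipeline(modules, image, kill_switch):
    """Apply each module in order, aborting as soon as the kill switch is set.

    Returns the processed image, or None when the run was killed.
    """
    for module in modules:
        if kill_switch.is_set():
            return None  # the output would be discarded anyway
        image = module(image)
    return image


# Hypothetical usage: the second "module" simulates the user changing a
# parameter while the pipeline is running, which sets the kill switch.
kill_switch = threading.Event()
executed = []


def make_module(name):
    def module(image):
        executed.append(name)
        return image + [name]
    return module


def user_changes_parameter(image):
    kill_switch.set()
    return image


result = run_pipeline(
    [make_module("exposure"), user_changes_parameter, make_module("filmic")],
    [],
    kill_switch,
)
# "filmic" never runs: the pipeline stops at the next check after the kill.
```

In a real application the flag would be set from the GUI thread and the pipeline would be restarted right away with the new parameters; the sketch only shows the early exit.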

Darktable has its own GUI widgets library, for sliders and comboboxes (aka drop-down menus or selection boxes), called Bauhaus (in the source code, it’s in src/bauhaus/bauhaus.c). While they use Gtk as a backend, Bauhaus widgets are custom objects. And like many things in Darktable, custom equals rotten.

In 2022, I noticed parasitic redraws and lags when using them, leading to a frustrating user experience: the widget redrawing seemed to wait for pipeline recomputations to complete, which meant that users were not really sure their value change was recorded, which could lead them to try again, starting another cycle of expensive recomputation, and effectively freezing their computer for several very frustrating minutes of useless intermediate pipeline recomputations.

Rawspeed (the library providing the decoders for camera raw files) has deprecated support for GCC < 12. As a result, I can no longer build the AppImage on Ubuntu 20.04 (using GitHub runners) and have to build it on 22.04 instead.

It means any Linux distribution having libc older than 2.35 will not be able to start the new AppImages starting today. That should not affect most users running distributions upgraded in 2021 or more recently. Ubuntu 20.04 and other LTS/old stable distributions (Debian stable) may be affected.
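If you are unsure which libc your system ships, the following Python sketch is one way to check it against the 2.35 threshold mentioned above. It relies on the standard-library `platform.libc_ver()`; the helper names `parse_version` and `libc_is_recent_enough` are made up for this example, and the check only covers the libc requirement, nothing else that could prevent an AppImage from starting.

```python
import platform


def parse_version(text):
    """Turn a dotted version string such as '2.35' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def libc_is_recent_enough(libc_version, minimum="2.35"):
    """Return True when libc_version is at least the minimum version."""
    return parse_version(libc_version) >= parse_version(minimum)


# platform.libc_ver() returns a (name, version) tuple; both are empty strings
# when the C library cannot be identified (for example outside Linux).
name, version = platform.libc_ver()
if name == "glibc" and version:
    print(f"glibc {version} meets the 2.35 requirement:",
          libc_is_recent_enough(version))
else:
    print("Could not detect glibc on this system")
```

Versions are compared as tuples of integers rather than as strings or floats, so that 2.4 is correctly treated as older than 2.35.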

The scene-referred workflow promises editing that is independent of the output medium. It will typically produce an image encoded in the sRGB colorspace with 8 bits, that is, code values between 0 and 255. To simplify, we will consider here only the 8-bit case. Concepts are the same in 16 bits, only the coding range goes from 0 to 65535, which is anecdotal.

Unfortunately, nothing guarantees that the printer is able to use the whole encoding range. The minimum density (Dmin in analog) is reached with bare paper, and matches an RGB code value of 255. The maximum density (Dmax in analog) is reached with 100% ink coverage. The problem is, if Dmin matches an RGB code value of 255, Dmax never matches an RGB value of 0.
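To put a number on it, here is a small Python sketch that converts a density range into the darkest 8-bit sRGB code value a print can actually show. It rests on assumptions that are mine, not measurements: bare paper is taken as code value 255, viewing conditions are ignored, and the density range of 2.0 is purely illustrative. The helper `darkest_printable_code` is a made-up name. The only physics used is the definition of optical density, D = -log10(reflectance), and the standard sRGB transfer function.

```python
def srgb_encode(linear):
    """sRGB transfer function: linear light in [0, 1] to encoded value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def darkest_printable_code(d_max, d_min=0.0):
    """8-bit sRGB code value of full ink coverage, relative to bare paper.

    Optical density is D = -log10(reflectance), so the ink reflects
    10 ** -(d_max - d_min) of what the bare paper reflects.
    """
    relative_reflectance = 10 ** -(d_max - d_min)
    return round(255 * srgb_encode(relative_reflectance))


# Assumed, illustrative density range of 2.0 between paper and full ink.
black_code = darkest_printable_code(2.0)
```

With that assumed range, full ink coverage reflects 1% of the paper white, which lands at code value 25 and not 0: every code value below that has no distinct density left on paper.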

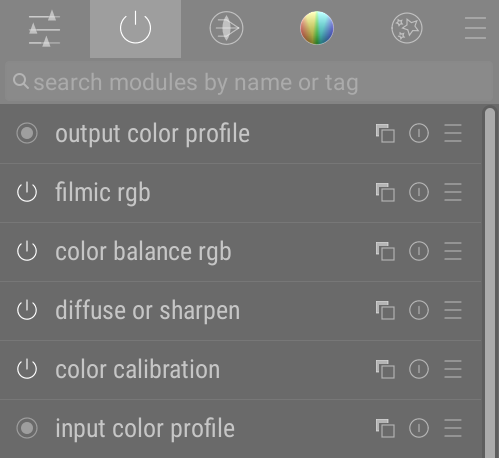

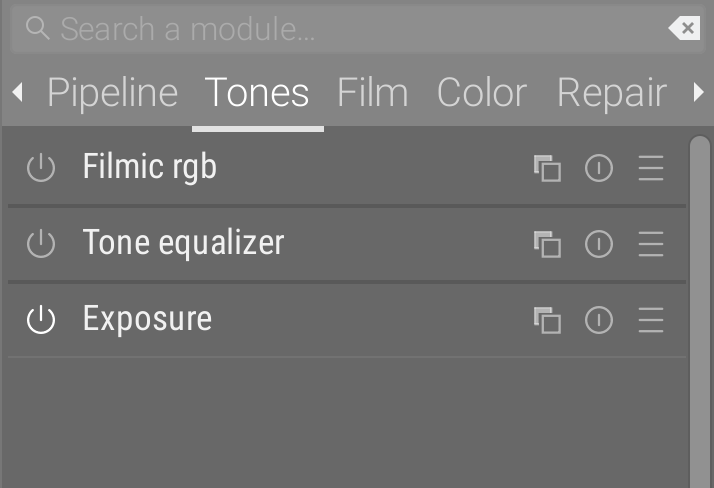

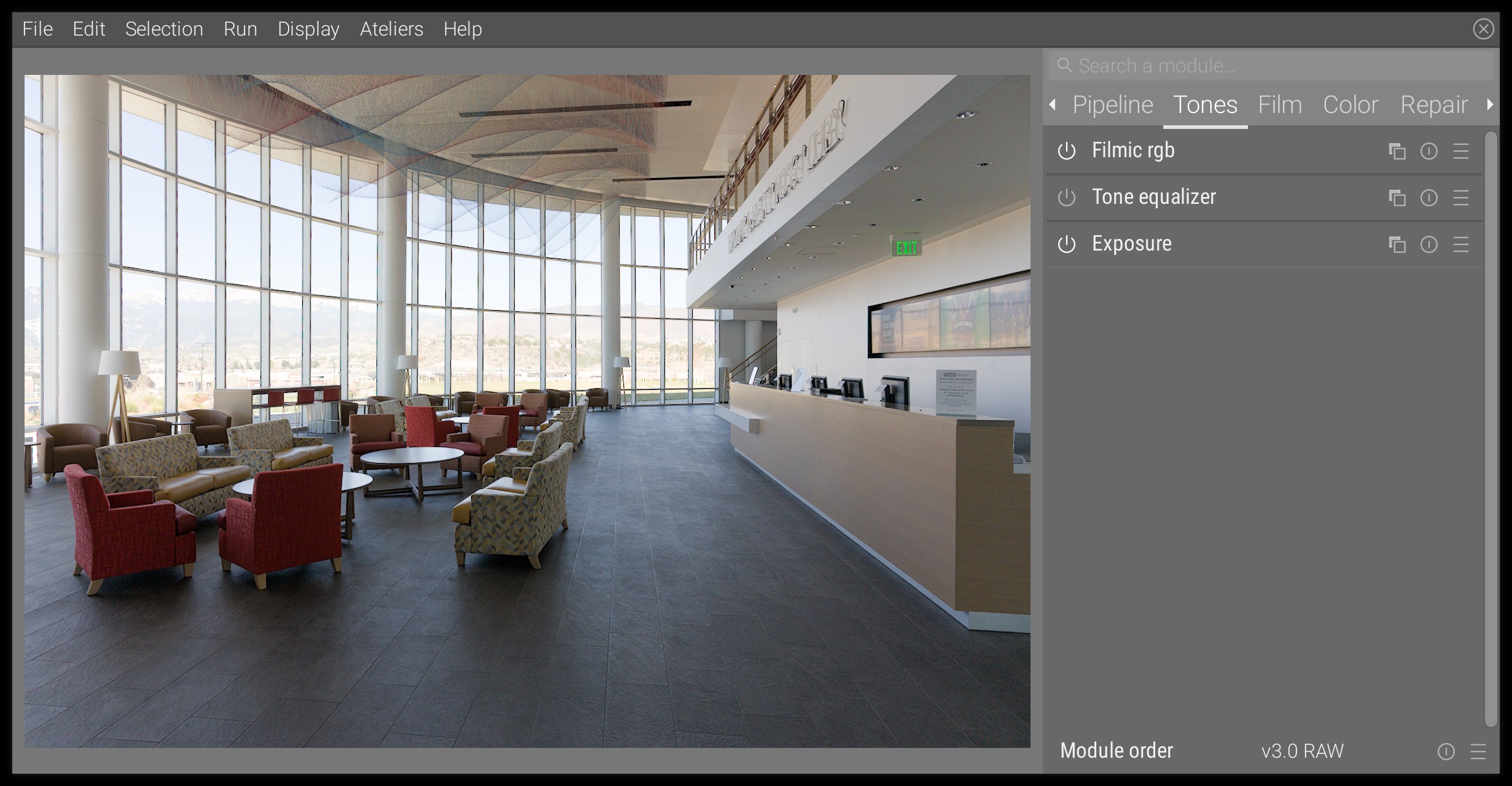

If you come from Darktable, you may be used to this in the darkroom:

while Ansel offers you this:

This is no accident, and it’s time to explain why, and why this will not be extended with customization options.

I accidentally discovered that the Linux build script used a “package” build, meaning the CPU optimizations are limited to generic ones in order to produce portable binaries that can be installed on any x86-64 platform. By “using”, I mean the package build was not explicitly disabled, so it was enabled by default.

Anyway, this is now disabled by default, since the actual packages (.exe and .appimage) are not built through that script, which is primarily meant to help end-users. To get the previous behaviour back, you would need to run:

2022 was so bad in terms of junk emails and noise that I started the Virtual Secretary, a Python framework to write intelligent email filters by crossing information between several sources to guess what incoming emails are and whether they are important/urgent or not. When I’m talking about junk emails, it’s also GitHub notifications, pings on pixls.us (thank God I closed my account on that stupid forum), YouTube, and direct emails from people hoping to get some help in private.

Here is how to get started with Ansel editing, going through only the most basic steps that should serve you well most of the time.

The video was recorded on Darktable 3, but the same modules and principles apply to Ansel.

This article will demonstrate how to perform monochrome toning on digital images in Ansel, to emulate the color rendition of cyanotypes, platinotypes, sepia and split-toning developments.

Set the global exposure and filmic scene white and scene black, as in any other editing. See basic editing steps. This is our base image, by Glenn Butcher:

It’s been roughly 3 months since I rebranded “R&Darktable” (that nobody seemed to get right) into “Ansel”, then bought the domain name and created the website from scratch with Hugo (I had never programmed in Golang before, but it’s mostly template code).

Then I spent a total of 70 h on making the nightly package builds for Windows and Linux work for continuous delivery, something that Darktable never got right (“you can build yourself, it’s not difficult”), only to see the bug tracker blow up after release (nothing better than chaining the pre-release sprint with a post-release one to reduce your life expectancy).

What happens when a gang of amateur photographers, turned into amateur developers, joined by a bunch of back-end developers who develop libraries for developers, decide to work without method or structure on an industry software for end-users, whose core competency (colorimetry and psychophysics) lies somewhere between a college degree in photography and a master’s degree in applied sciences, while promising to deliver 2 releases each year without project management? All that, of course, in a project where the founders and the first generation of developers moved on and fled?

In this article, you will learn what the scene-referred workflow is, how Ansel uses it and why it benefits digital image processing at large.

The scene-referred workflow is the backbone of Ansel’s imaging pipeline. It is a working logic that comes from the cinema industry, because it is the only way to achieve robust, seamless compositing (also known as alpha blending) of layered graphics, upon which movies rely heavily to blend computer-generated special effects into real-life footage. For photographers, it is mostly for high dynamic range (HDR) scenes (backlit subject, sunsets, etc.) that it proves itself useful.
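Why blending cares about the encoding can be seen with a tiny Python sketch. This is not Ansel’s code, only an illustration under simplifying assumptions: a single grey channel, the standard sRGB transfer function standing in for “display encoding”, and a plain 50% “over” blend of white on black. The function names are chosen for the example.

```python
def srgb_decode(encoded):
    """Encoded sRGB value in [0, 1] to linear light in [0, 1]."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def srgb_encode(linear):
    """Linear light in [0, 1] to encoded sRGB value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1 / 2.4) - 0.055


def blend(background, foreground, alpha):
    """Plain 'over' blend of two values with opacity alpha."""
    return alpha * foreground + (1 - alpha) * background


black, white = 0.0, 1.0  # encoded sRGB values

# Display-referred: blend the encoded values directly.
naive = blend(black, white, 0.5)

# Linear light: decode, blend, then encode again for display.
linear = srgb_encode(blend(srgb_decode(black), srgb_decode(white), 0.5))
```

Blending the encoded values gives 0.5, which once decoded is only about 21% of the light of white, while blending in linear light gives half the light, displayed at roughly 0.735. Mixing light is a physical operation, so it only behaves like the real thing when the numbers are proportional to light, which is what the scene-referred pipeline works on.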

You can also ask Chantal, the AI search engine.
